In today’s interconnected world, managing cybersecurity across multiple tenants is both a challenge and an opportunity. For organizations and Managed Service Providers (MSPs) working with countless customers—each with their own Azure and Microsoft 365 tenant—Microsoft Sentinel stands out as a powerful tool for ensuring robust security. Whether you’re scaling Sentinel deployments across distributed tenants or centralizing data collection into a single workspace, each approach comes with unique benefits and trade-offs.

This post describes Microsoft Sentinel strategies for multi-tenant environments. We’ll explore distributed setups that empower customer-specific management, centralized models that simplify administration and cost management, and a hybrid model that combines the strengths of both approaches. By the end, you’ll have a clear roadmap for leveraging Microsoft Sentinel to master multi-tenant security, regardless of your scenario.

Overview of Design Principles

Prior to delving into the specific scenarios, it is imperative to establish some fundamental design principles that guide the planning of the Microsoft Sentinel workspace architecture. These principles are outlined in detail in the Microsoft Sentinel best practices documentation.

| Criteria | Description |
| --- | --- |
| Operational and security data | You may choose to combine operational data from Azure Monitor in the same workspace as security data from Microsoft Sentinel or separate each into their own workspace. Combining them gives you better visibility across all your data, while your security standards might require separating them so that your security team has a dedicated workspace. You may also have cost implications to each strategy. |
| Azure tenants | If you have multiple Azure tenants, you’ll usually create a workspace in each one. Several data sources can only send monitoring data to a workspace in the same Azure tenant. |
| Azure regions | Each workspace resides in a particular Azure region. You might have regulatory or compliance requirements to store data in specific locations. |
| Data ownership | You might choose to create separate workspaces to define data ownership. For example, you might create workspaces by subsidiaries or affiliated companies. |
| Split billing | By placing workspaces in separate subscriptions, they can be billed to different parties. |
| Data retention | You can set different retention settings for each workspace and each table in a workspace. You need a separate workspace if you require different retention settings for different resources that send data to the same tables. |
| Commitment tiers | Commitment tiers allow you to reduce your ingestion cost by committing to a minimum amount of daily data in a single workspace. |
| Legacy agent limitations | Legacy virtual machine agents have limitations on the number of workspaces they can connect to. |
| Data access control | Configure access to the workspace and to different tables and data from different resources. |
| Resilience | To ensure that data in your workspace is available in the event of a region failure, you can ingest data into multiple workspaces in different regions. |

The Azure tenants criterion, however, does not spell out which data sources can only send monitoring data to a workspace within the same Azure tenant. This post explains the design principles, advantages, and disadvantages of each scenario, with a primary focus on the integration of Microsoft Defender XDR.

Scenario 1: Distributed Microsoft Sentinel Setup Across Customer Tenants

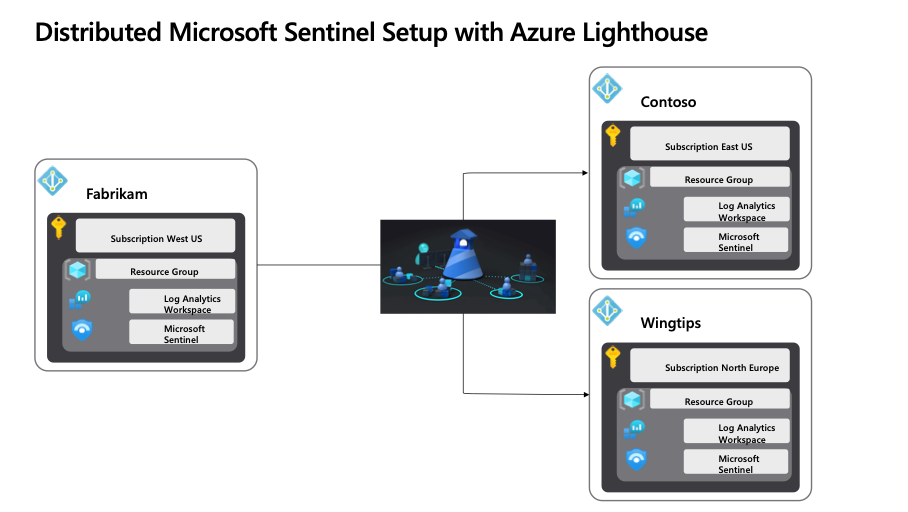

Let’s begin with the distributed Microsoft Sentinel setup, which, as of the date of this blog post, is the recommended and best practice architecture. The accompanying illustration depicts the organizations Contoso and Wingtips, each with Azure and Microsoft 365, as part of a global company called Fabrikam. Fabrikam aims to establish a global SOC and connect both tenants and log files from Contoso and Wingtips.

Figure 1: Distributed Microsoft Sentinel Setup with Azure Lighthouse

In a distributed architecture, a Log Analytics workspace is established within each Azure tenant. This option is the sole viable choice for monitoring Azure services beyond virtual machines. With Azure Lighthouse, administrators can then access each customer’s workspaces from within their own service provider tenant instead of having to sign in to each customer’s tenant individually.

Advantages to this strategy:

- Logs can be collected from all types of resources, which is particularly advantageous in the context of Microsoft Defender XDR. The native integration provides bidirectional synchronization of alerts and incidents, enhancing the overall system’s capabilities.

- Azure delegated resource management allows Contoso and Wingtips to confirm specific levels of permissions. Alternatively, both can manage access to logs using their own Azure RBAC.

- Contoso and Wingtips can customize their workspace settings, including retention and data cap policies. Regulatory and compliance isolation is also provided. The charge for each workspace is included in the origin tenant subscription bill.

Disadvantages to this strategy:

- Running a query across a large number of workspaces is slow and can’t scale beyond 100 workspaces. This means that you can create a central visualization and data analytics system, but it will become slow if there are more than a few dozen workspaces.

- If Contoso and Wingtips aren’t onboarded for Azure delegated resource management, Fabrikam administrators must be provisioned in the customer directory. This requirement makes it more challenging for Fabrikam to manage multiple customer tenants simultaneously.

- When running a query on a workspace, the workspace administrators may have access to the complete text of the query through query audit.
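To make the cross-workspace query limit concrete, here is a minimal Python sketch that assembles a KQL `union` over the same table in several workspaces. The helper name `build_cross_workspace_query`, the workspace names, and the `SecurityIncident` table are assumptions chosen for illustration; the 100-workspace ceiling is the one stated above.

```python
MAX_WORKSPACES = 100  # ceiling for a single cross-workspace query, as noted above


def build_cross_workspace_query(workspaces, table="SecurityIncident"):
    """Return a KQL union over the same table in several workspaces.

    Hypothetical helper: workspace names and the table are placeholders.
    """
    if not workspaces:
        raise ValueError("at least one workspace is required")
    if len(workspaces) > MAX_WORKSPACES:
        raise ValueError(
            f"a single query cannot span more than {MAX_WORKSPACES} workspaces"
        )
    # workspace("<name>").<Table> addresses a table in another workspace.
    sources = ", ".join(f'workspace("{name}").{table}' for name in workspaces)
    # withsource adds a column that records which workspace each row came from.
    return f"union withsource=SourceWorkspace {sources}"


print(build_cross_workspace_query(["contoso-sentinel", "wingtips-sentinel"]))
```

The generated query can be pasted into the Logs blade of any workspace the Fabrikam analysts can reach through Azure Lighthouse.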

The distributed scenario is straightforward and does not need any additional explanation. You can follow the Sentinel deployment best practices guide and attach Azure Lighthouse to it.

Scenario 2: Centralized Microsoft Sentinel Setup Across Customer Tenants

Next, let’s delve deeper into the centralized Microsoft Sentinel setup and examine its advantages, disadvantages, and potential solutions. The accompanying illustration shows the same organizations, Contoso and Wingtips, as in the distributed scenario, each with Azure and Microsoft 365 as part of a global company called Fabrikam. Fabrikam’s objective is to establish a global SOC and connect both tenants and log files from Contoso and Wingtips.

A single workspace with Microsoft Sentinel attached is created in Fabrikam’s subscription. This option can collect data from Contoso and Wingtips virtual machines and from Azure PaaS services based on diagnostic settings. Agents installed on the virtual machines, and diagnostic settings on the PaaS services, can be configured to send their logs to this central workspace.

However, some first-party data connectors are not natively supported across tenants:

- Microsoft 365 Defender connectors (like Defender for Endpoint, Defender for Office 365, and Defender for Identity) are generally limited to their own tenant boundaries.

- Entra ID (AAD) and Office 365 connectors also face cross-tenant restrictions. However, custom solutions using APIs (like the Office 365 Management API or Microsoft Graph API) can be implemented to work around these limitations.

Note: I personally wouldn’t recommend using APIs to pull data from various Microsoft 365 Defender tenants to a single Microsoft Sentinel instance, especially since the new unified security operations (SecOps) platform offers a wealth of new and enhanced capabilities for managing XDR and SIEM.

However, there are managed service providers and customers who prefer not to deploy and configure their own resources and instances of Sentinel, along with its prerequisites, as this also entails management and operational effort, which ultimately leads to additional costs. If you’re looking to centralize logs from multiple tenants into one workspace, direct ingestion isn’t possible with the native configuration. Instead, you can:

- Use custom APIs like Microsoft Graph or the Office 365 Management API to collect data and push it into a centralized workspace.

- Leverage Logic Apps or Azure Functions to automate data collection from multiple tenants and centralize them into one workspace.

2.1. Use Custom APIs to Collect Data and Push it to Sentinel

This section outlines a comprehensive guide on configuring and deploying an illustrative solution for data collection (pull) from XDR and data transmission (push) to Sentinel.

2.1.1. Set Up Microsoft Graph API

- Register an application in Entra ID for each tenant you want to pull data from.

- Assign the necessary permissions: SecurityAlert.Read.All and SecurityIncident.Read.All for accessing security alerts and incidents.

- Generate the Client ID, Tenant ID, and Client Secret.

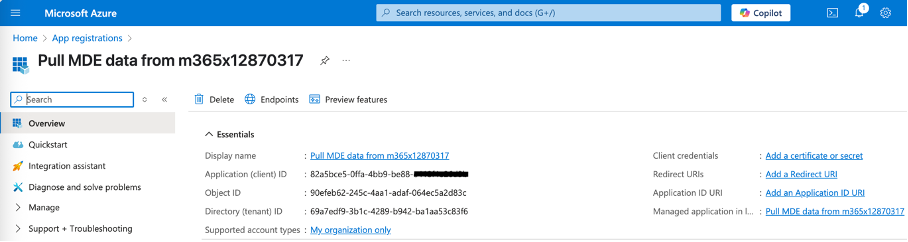

In this example, I’m going to register the application in the tenant m365x12870317.onmicrosoft.com to pull the data.

Figure 2: Entra ID App Registration

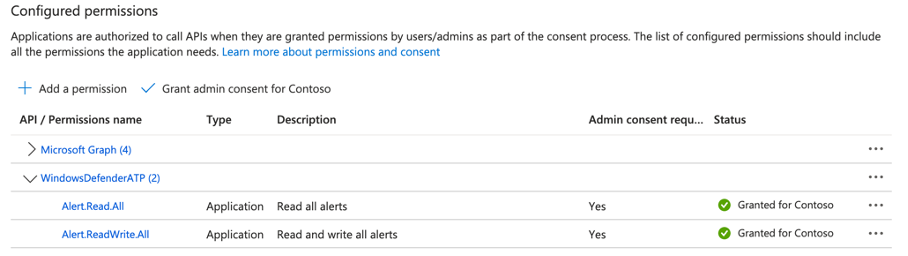

Assign the necessary permissions: Alert.Read.All and Alert.ReadWrite.All for accessing security alerts (and sample alert creating in case you want to test it).

Figure 3: Entra ID App Registration Graph API Permissions

Generate the Client ID, Tenant ID, and Client Secret.

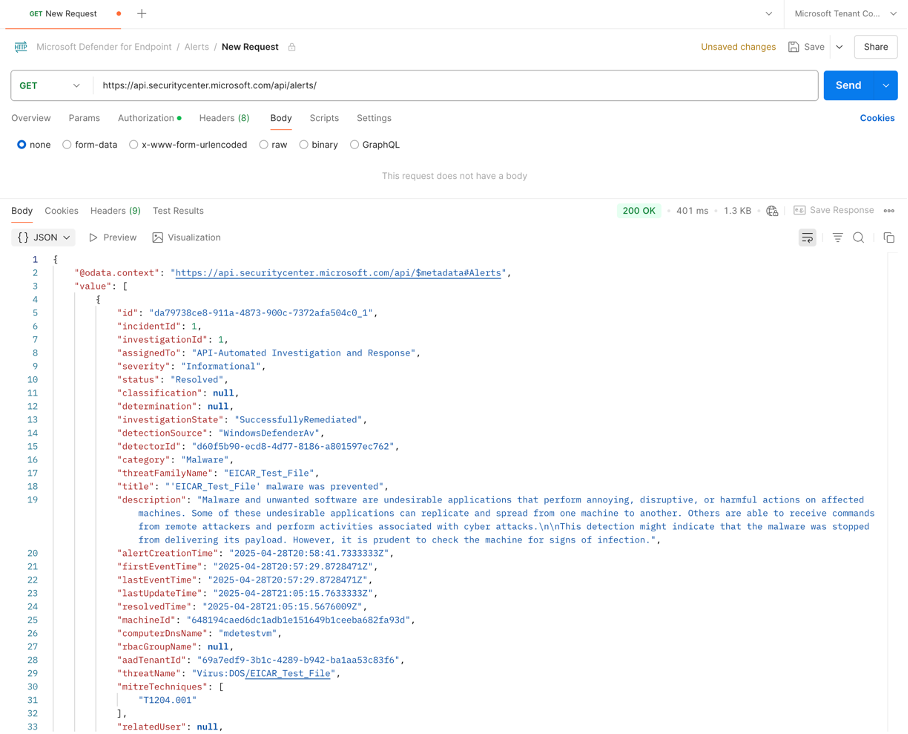

Note: To verify the permissions and connectivity, utilize an API testing tool such as Postman. This tool also enables you to access all parameters for specific alerts, facilitating the definition of the Azure Logic App in the subsequent step.

Figure 4: GET request to XDR Alerts with Postman
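As an alternative to Postman, the same connectivity check can be scripted. The following is a minimal Python sketch using only the standard library. It assumes the client credentials flow against the app registration created above; the helper names `build_token_request` and `fetch_alerts` are hypothetical, and the tenant ID, client ID, and secret are placeholders you supply.

```python
import json
import urllib.parse
import urllib.request

GRAPH_SCOPE = "https://graph.microsoft.com/.default"


def build_token_request(tenant_id, client_id, client_secret):
    """Build (but do not send) the client-credentials token request."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urllib.parse.urlencode({
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": GRAPH_SCOPE,
        "grant_type": "client_credentials",
    }).encode("ascii")
    return urllib.request.Request(
        url, data=form, method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


def fetch_alerts(token_request, top=5):
    """Network call: obtain a token, then list the first few alerts."""
    with urllib.request.urlopen(token_request) as resp:
        token = json.load(resp)["access_token"]
    alerts_request = urllib.request.Request(
        f"https://graph.microsoft.com/v1.0/security/alerts?$top={top}",
        headers={"Authorization": f"Bearer {token}"},
    )
    with urllib.request.urlopen(alerts_request) as resp:
        return json.load(resp)["value"]
```

Calling `fetch_alerts(build_token_request(tenant, client, secret))` should return a list of alert objects if the permissions were granted and admin consent was given; an HTTP 403 usually points to missing consent.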

2.1.2. Fetch data from Defender for Endpoint

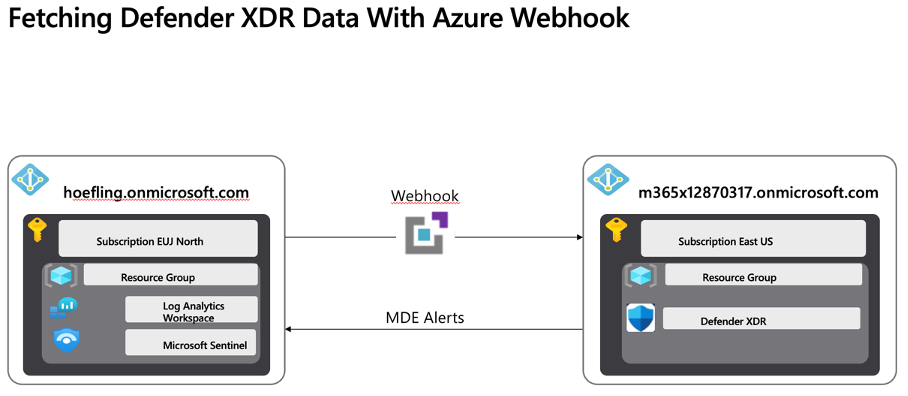

To get data into the centralized Sentinel instance or tenant, various methods and tooling options are available, including Azure Webhooks (as outlined in this blog post with Azure Logic Apps), Azure Functions, and custom development, for example in Python.

Azure Webhooks offer a significant advantage by enabling HTTP callbacks that trigger actions in response to specific events. This eliminates the need to repeatedly call an API at fixed intervals to retrieve Defender XDR alert details. This mechanism provides greater convenience and reduces resource consumption.
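The callback logic a webhook receiver has to implement can be sketched in a few lines of Python. This is an illustrative model, not the Logic App itself: the function name `handle_graph_callback` and its return shape are assumptions. It reflects two behaviours of Microsoft Graph change notifications: the subscription handshake passes a `validationToken` query parameter that must be echoed back as plain text, and each notification carries the changed resource's ID under `resourceData`.

```python
import json


def handle_graph_callback(query, body, expected_client_state=None):
    """Return (status, content_type, payload) for one webhook call.

    query: dict of query-string parameters; body: raw request body as str.
    """
    token = query.get("validationToken")
    if token is not None:
        # Subscription handshake: echo the token back as plain text.
        return 200, "text/plain", token

    notifications = json.loads(body or "{}").get("value", [])
    alert_ids = []
    for item in notifications:
        # Drop notifications whose clientState does not match our secret.
        if (expected_client_state is not None
                and item.get("clientState") != expected_client_state):
            continue
        alert_id = item.get("resourceData", {}).get("id")
        if alert_id:
            alert_ids.append(alert_id)
    # Acknowledge quickly; fetching the full alerts happens afterwards.
    return 202, "application/json", json.dumps({"alertIds": alert_ids})
```

Checking `clientState` against the value supplied when the subscription was created is a cheap way to reject callbacks that did not originate from your own subscription.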

For a better overview of what we are going to achieve, the following illustration explains the process:

Figure 5: Fetching Defender XDR Data With Azure Webhook

- Create an Azure Logic App

- Add a stateful HTTP Webhook Trigger

Choose “When an HTTP request is received”.

Copy the Webhook URL (it will be used as notificationUrl in your Microsoft Graph subscription).

```json
{
  "definition": {
    "$schema": "https://schema.management.azure.com/providers/Microsoft.Logic/schemas/2016-06-01/workflowdefinition.json#",
    "contentVersion": "1.0.0.0",
    "parameters": {
      "tenant_id": { "type": "string", "defaultValue": "<your-tenant-id>" },
      "client_id": { "type": "string", "defaultValue": "<your-client-id>" },
      "client_secret": { "type": "string", "defaultValue": "<your-client-secret>" },
      "workspace_id": { "type": "string", "defaultValue": "<your-loganalytics-workspace-id>" },
      "shared_key": { "type": "string", "defaultValue": "<your-shared-key>" },
      "log_type": { "type": "string", "defaultValue": "DefenderAlerts" }
    },
    "triggers": {
      "When_a_HTTP_request_is_received": {
        "type": "Request",
        "kind": "Http",
        "inputs": {
          "schema": {
            "type": "object",
            "properties": {
              "value": {
                "type": "array",
                "items": { "type": "object" }
              }
            }
          }
        }
      }
    },
    "actions": {
      "Check_validationToken": {
        "type": "If",
        "expression": {
          "and": [
            {
              "not": {
                "equals": [
                  "@triggerOutputs()?['queries']?['validationToken']",
                  "@null"
                ]
              }
            }
          ]
        },
        "actions": {
          "Respond_with_token": {
            "type": "Response",
            "kind": "Http",
            "inputs": {
              "statusCode": 200,
              "headers": { "Content-Type": "text/plain" },
              "body": "@triggerOutputs()?['queries']?['validationToken']"
            }
          }
        },
        "else": {
          "actions": {
            "For_each_alert": {
              "type": "Foreach",
              "foreach": "@triggerBody()?['value']",
              "actions": {
                "Get_Token": {
                  "type": "Http",
                  "inputs": {
                    "method": "POST",
                    "uri": "https://login.microsoftonline.com/@{parameters('tenant_id')}/oauth2/v2.0/token",
                    "headers": {
                      "Content-Type": "application/x-www-form-urlencoded"
                    },
                    "body": "client_id=@{parameters('client_id')}&scope=https%3A%2F%2Fgraph.microsoft.com%2F.default&client_secret=@{parameters('client_secret')}&grant_type=client_credentials"
                  },
                  "runAfter": {}
                },
                "Parse_Token": {
                  "type": "ParseJson",
                  "inputs": {
                    "content": "@body('Get_Token')",
                    "schema": {
                      "type": "object",
                      "properties": {
                        "access_token": { "type": "string" }
                      }
                    }
                  },
                  "runAfter": { "Get_Token": [ "Succeeded" ] }
                },
                "Get_Full_Alert": {
                  "type": "Http",
                  "inputs": {
                    "method": "GET",
                    "uri": "https://graph.microsoft.com/v1.0/security/alerts/@{items('For_each_alert')?['resourceData']?['id']}",
                    "headers": {
                      "Authorization": "Bearer @{body('Parse_Token')?['access_token']}"
                    }
                  },
                  "runAfter": { "Parse_Token": [ "Succeeded" ] }
                },
                "Set_Date": {
                  "type": "Compose",
                  "inputs": "@utcNow('R')",
                  "runAfter": { "Get_Full_Alert": [ "Succeeded" ] }
                },
                "Get_Sentinel_Signature": {
                  "type": "Http",
                  "inputs": {
                    "method": "POST",
                    "uri": "https://<your-function-app>.azurewebsites.net/api/GenerateSentinelSignature",
                    "headers": {
                      "Content-Type": "application/json"
                    },
                    "body": {
                      "body": "@string(body('Get_Full_Alert'))",
                      "date": "@outputs('Set_Date')",
                      "workspaceId": "@parameters('workspace_id')",
                      "sharedKey": "@parameters('shared_key')"
                    }
                  },
                  "runAfter": { "Set_Date": [ "Succeeded" ] }
                },
                "Send_to_Sentinel": {
                  "type": "Http",
                  "inputs": {
                    "method": "POST",
                    "uri": "https://@{parameters('workspace_id')}.ods.opinsights.azure.com/api/logs?api-version=2016-04-01",
                    "headers": {
                      "Content-Type": "application/json",
                      "Log-Type": "@parameters('log_type')",
                      "x-ms-date": "@outputs('Set_Date')",
                      "Authorization": "@body('Get_Sentinel_Signature')"
                    },
                    "body": "@string(body('Get_Full_Alert'))"
                  },
                  "runAfter": { "Get_Sentinel_Signature": [ "Succeeded" ] }
                }
              },
              "runAfter": {}
            }
          }
        },
        "runAfter": {}
      }
    },
    "outputs": {}
  },
  "kind": "Stateful"
}
```

- Create an Azure Function that generates the required HMAC-SHA256 signature for Sentinel

```csharp
using System;
using System.IO;
using System.Security.Cryptography;
using System.Text;
using System.Threading.Tasks;
using Microsoft.AspNetCore.Http;
using Microsoft.AspNetCore.Mvc;
using Microsoft.Azure.WebJobs;
using Microsoft.Azure.WebJobs.Extensions.Http;
using Newtonsoft.Json;

public static class GenerateSentinelSignature
{
    [FunctionName("GenerateSentinelSignature")]
    public static async Task<IActionResult> Run(
        [HttpTrigger(AuthorizationLevel.Function, "post", Route = null)] HttpRequest req)
    {
        // Read the JSON payload sent by the Logic App.
        var requestBody = await new StreamReader(req.Body).ReadToEndAsync();
        dynamic data = JsonConvert.DeserializeObject(requestBody);

        string body = data?.body;
        string date = data?.date; // must equal the x-ms-date header sent to Sentinel
        string workspaceId = data?.workspaceId;
        string sharedKey = data?.sharedKey;

        if (body == null || date == null || workspaceId == null || sharedKey == null)
        {
            return new BadRequestObjectResult("body, date, workspaceId and sharedKey are required.");
        }

        // String to sign: method, content length in bytes, content type, x-ms-date header, resource path.
        string stringToSign = $"POST\n{Encoding.UTF8.GetByteCount(body)}\napplication/json\nx-ms-date:{date}\n/api/logs";

        byte[] keyBytes = Convert.FromBase64String(sharedKey);
        byte[] messageBytes = Encoding.ASCII.GetBytes(stringToSign);

        using (var hmacSha256 = new HMACSHA256(keyBytes))
        {
            byte[] hash = hmacSha256.ComputeHash(messageBytes);
            string signature = Convert.ToBase64String(hash);
            string authorization = $"SharedKey {workspaceId}:{signature}";
            return new OkObjectResult(authorization);
        }
    }
}
```
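For readers who prefer to test the signature logic outside Azure Functions, the same computation can be sketched in Python with the standard library. The function name `build_signature` and the dummy workspace ID and key are assumptions for illustration; the string-to-sign layout mirrors the C# function above.

```python
import base64
import hashlib
import hmac
from email.utils import formatdate


def build_signature(workspace_id, shared_key, date, content_length,
                    method="POST", content_type="application/json",
                    resource="/api/logs"):
    """Return the Authorization header value for the Data Collector API."""
    string_to_sign = (
        f"{method}\n{content_length}\n{content_type}\n"
        f"x-ms-date:{date}\n{resource}"
    )
    key = base64.b64decode(shared_key)  # the workspace key is Base64-encoded
    digest = hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).digest()
    return f"SharedKey {workspace_id}:{base64.b64encode(digest).decode('ascii')}"


# Example with placeholder values (not a real workspace or key).
payload = '{"title": "sample alert"}'.encode("utf-8")
rfc1123_date = formatdate(usegmt=True)  # RFC 1123 date string ending in "GMT"
dummy_key = base64.b64encode(b"not-a-real-key").decode("ascii")
print(build_signature("<workspace-id>", dummy_key, rfc1123_date, len(payload)))
```

The date passed in must be the exact string later sent in the `x-ms-date` header, and the content length must be the byte length of the body that is actually posted; otherwise the signature check fails.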

- Create a Microsoft Graph subscription:

You’ll need to create a Microsoft Graph subscription for Defender for Endpoint alerts. You can do this via Microsoft Graph API. Example request to create a subscription:

```http
POST https://graph.microsoft.com/v1.0/subscriptions
Content-Type: application/json
Authorization: Bearer <access_token>
```

Subscription Body Example

```json
{
  "changeType": "created",
  "notificationUrl": "https://<your-logic-app-url>",
  "resource": "security/alerts",
  "expirationDateTime": "2025-05-30T00:00:00Z",
  "clientState": "yourCustomSecret"
}
```

Once the alert reaches Sentinel, you can view it and set up responses to future alerts. To verify, open the Log Analytics workspace and run the following KQL query:

```kusto
DefenderAlerts_CL
| sort by TimeGenerated desc
```

Scenario 3: Hybrid Model

In a hybrid model, each tenant has its own workspace. A mechanism is used to pull data into a central location for reporting and analytics. This data could include a small number of data types or a summary of the activity, such as daily statistics. This requires the same effort and configuration as the centralized scenario.
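As an illustration of what such a summary could look like, the following Python sketch reduces a batch of alert records to daily counts per tenant and severity before anything is forwarded to the central location. The function name `summarize_daily` and the field names `tenantId`, `createdDateTime`, and `severity` are assumptions about the record shape, not a fixed schema.

```python
from collections import Counter


def summarize_daily(alerts):
    """Count alerts per (tenant, day, severity).

    Each alert is a dict; the field names used here are assumed for the sketch.
    """
    counts = Counter()
    for alert in alerts:
        day = alert["createdDateTime"][:10]  # date part of an ISO 8601 timestamp
        severity = alert.get("severity", "unknown")
        counts[(alert["tenantId"], day, severity)] += 1
    return [
        {"tenantId": tenant, "day": day, "severity": severity, "count": count}
        for (tenant, day, severity), count in sorted(counts.items())
    ]


sample = [
    {"tenantId": "contoso", "createdDateTime": "2025-01-01T10:00:00Z", "severity": "high"},
    {"tenantId": "contoso", "createdDateTime": "2025-01-01T12:30:00Z", "severity": "high"},
    {"tenantId": "wingtips", "createdDateTime": "2025-01-02T09:00:00Z"},
]
for row in summarize_daily(sample):
    print(row)
```

Forwarding only rows like these keeps the central workspace small while the detailed logs stay in each tenant's own workspace.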

Disadvantages and Things To Consider

1. No Bi-Directional Sync (Cross-Tenant)

Since you’re moving data from Defender for Endpoint in one Azure tenant to Sentinel in another tenant, the setup is one-way. This means:

- No direct synchronization from Sentinel to Defender for Endpoint: If you need to respond to alerts or take action directly within Defender from Sentinel (e.g., marking an alert as resolved or taking a remediation action), you’ll need to set up additional workflows and tools (like an additional Logic App or Playbook).

- No event propagation: For example, if an action is taken in Sentinel (such as a rule triggering or a custom playbook running), Defender for Endpoint isn’t updated automatically unless you create another flow to handle that. Thus, this setup doesn’t allow bi-directional synchronization of alert statuses or other data.

2. Cross-Tenant Management Complexity

Managing multiple Azure tenants adds some complexity:

- Cross-tenant authentication: You’ll need to manage service principals, API permissions, and access between tenants, which can lead to additional maintenance overhead.

- Potential delays: Because of the inter-tenant communication, there could be slight delays or failures if there’s an issue with API or authentication. For example, if Microsoft Graph experiences issues or token validation fails, alerts might not be ingested into Sentinel.

- Subscription and Tenant Management: Ensuring that each tenant’s resources (alerts, logs, etc.) are managed properly might involve more administrative overhead, especially when it comes to rotating secrets, managing access, and handling permissions between tenants.
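One common way to soften transient Graph or token failures is to retry with exponential backoff. The sketch below is a generic pattern, not part of the Logic App shown earlier; the helper name `call_with_retry` and its default values are assumptions.

```python
import time


def call_with_retry(operation, attempts=4, base_delay=1.0, sleep=time.sleep):
    """Run operation(); on failure wait base_delay * 2**n seconds and retry.

    Re-raises the last exception once all attempts are used up.
    """
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

Wrapping the token request or the alert fetch in `call_with_retry(lambda: ...)` keeps a short outage from dropping an alert, at the cost of additional latency for that alert.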

3. Dependence on External Functions (Azure Function)

You’re relying on an external Azure Function to generate the HMAC signature for authentication with Sentinel’s Log Analytics Data Collector API. This introduces several risks:

- Availability: If the Azure Function becomes unavailable (due to downtime, maintenance, or any other issue), your Logic App won’t be able to generate the correct signature and thus won’t be able to send data to Sentinel.

- Scalability: While Functions are scalable, you still need to monitor their performance and ensure that they can handle the expected load, especially if you’re processing many alerts.

- Code Maintenance: The code in the Azure Function needs to be maintained, updated, and tested periodically. Any changes in Sentinel’s API or Microsoft Graph’s API could break this setup.

4. Manual Configuration and Maintenance

The need to create and configure the Microsoft Graph subscription manually via the Graph API (or Graph Explorer) means that there is manual setup involved:

- Subscription Renewal: Graph subscriptions expire after a maximum lifetime that depends on the resource type (often about 3 days), so you need a recurring task (perhaps another Logic App or automation) to renew the subscription periodically.

- Monitoring the Workflow: Although the Logic App gives you monitoring features, there is still an ongoing need to check the status of the workflow, including checking for failures in alert processing, token validation, or data posting to Sentinel.

- Cross-Service Troubleshooting: If something fails (e.g., if an alert isn’t showing up in Sentinel), you need to debug across multiple services (Microsoft Defender, Graph API, Logic Apps, Azure Functions, Sentinel).
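The renewal task mentioned above boils down to a PATCH on the subscription with a new `expirationDateTime`. A minimal Python sketch of building that request follows; the helper name `build_renewal_request` is hypothetical, and the lifetime you pass must stay within the maximum allowed for the subscribed resource.

```python
import json
from datetime import datetime, timedelta, timezone


def build_renewal_request(subscription_id, lifetime, now=None):
    """Describe the PATCH call that extends a Graph subscription.

    lifetime: timedelta to add to the current time; now: override for testing.
    """
    now = now or datetime.now(timezone.utc)
    expires = (now + lifetime).strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "method": "PATCH",
        "url": f"https://graph.microsoft.com/v1.0/subscriptions/{subscription_id}",
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"expirationDateTime": expires}),
    }


request = build_renewal_request("<subscription-id>", timedelta(days=2))
print(request["method"], request["url"])
print(request["body"])
```

Scheduling this call comfortably before the current expiry (for example, daily) avoids a gap in which alerts are generated but no notification is sent.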

5. Security and Compliance Considerations

- Cross-tenant data flow: Since the solution involves data flowing between two different Azure tenants, it’s essential to ensure that this setup complies with your organization’s security policies. Sensitive data might be exposed if the authentication mechanism (e.g., tokens) isn’t managed securely.

- Sensitive Data in Logs: Data passed between services (Logic Apps, Functions, Sentinel) could be subject to retention policies or log aggregation, so data privacy and compliance with regulations (e.g., GDPR, HIPAA) need to be considered carefully.

- Permissions: You must ensure that both tenants have the correct permissions configured for accessing Microsoft Defender and Sentinel data, and ensure these permissions are regularly reviewed to prevent unauthorized access.

6. Increased Latency

Since the data is being passed through a series of services (Defender -> Graph -> Logic App -> Azure Function -> Sentinel), there is inherent latency:

- Alert delay: The delay between when an alert is generated in Defender for Endpoint and when it’s received and processed in Sentinel could range from a few seconds to a few minutes, depending on the volume of alerts and the processing time.

- Compounding delays: If any step fails or experiences delays (like token validation failure, Graph API delays, or Azure Function processing), the alert may be delayed or missed.

7. Cost Implications

There are a few cost implications in this solution:

- Logic App consumption pricing: Logic Apps charge based on the number of executions, connectors used, and the frequency of the workflow. Depending on the number of alerts processed, the cost can add up over time.

- Azure Function costs: Azure Functions charge based on execution time and resource usage. If you have a high volume of Defender alerts, this could lead to higher costs for Function execution.

- Sentinel ingestion costs: Each log ingested into Microsoft Sentinel can incur costs based on the data volume. Depending on the frequency of alerts and their size, costs can increase.